Serverless Architecture Explained: How It Works and Why It Matters

Startups

The cloud revolution has hit a critical tipping point—your infrastructure decisions now make or break both your engineering speed and bottom line. Today’s winning playbook for many major players is a blended approach: containers to manage your stateful workloads and complex systems, while serverless architecture handles your event triggers and innovation sandboxes. This blend optimizes performance, which means you can iterate, scale, and ultimately deliver value faster than your competitors.

We’ll break down the basics of serverless architecture, show you how to put it into action with practical patterns, and walk through considerations and limitations you may encounter.

What is serverless architecture?

Software applications have historically been built on a range of infrastructure—from physical servers and virtual machines to containers—following various architectural patterns. N-tier architectures (three-tier is the popular choice) are prevalent, but monolithic applications and service-oriented architectures (SOA) are also commonly used.

Computing resources in such traditional architectural models generally operate continuously regardless of application load, consuming resources even during periods of low utilization. The organization is required to provision infrastructure based on anticipated peak demand plus a safety margin, which often results in unused capacity during normal operations.

In contrast, in a serverless design pattern, computing resources are dynamically allocated only when functions execute and are released immediately afterward. As a result, you achieve a consumption-based cost model rather than paying for an always-on infrastructure.

Though the term “serverless” might suggest there are no servers, in reality, the servers are abstracted away from application development processes. Serverless architecture shifts the way we build software, based on three core concepts that differ significantly from traditional approaches.

- First is event-driven: everything happens in response to events. When a customer submits an order or an IoT device sends data, it triggers your code to run. Nothing further executes until something happens.

- Second: In a stateless environment, each piece of code works independently, without remembering previous requests. Every function execution starts afresh.

- Third is its ephemeral nature, meaning the compute resources appear only when needed and vanish immediately afterward. In some cases, they might exist for just milliseconds.

Why serverless?

Despite the benefits a serverless design offers, most organizations fail to factor in the unique constraints of serverless environments, and hence struggle with unexpected post-deployment challenges.

The allure of serverless lies in its promise of streamlined operations and cost efficiency. By abstracting away the complexities of server management, developers can focus solely on writing code, leading to faster development cycles and quicker time-to-market. Moreover, serverless architectures often translate to significant cost savings, as you only pay for the compute resources consumed during execution, eliminating the overhead of idle servers.

Despite these benefits, many organizations overlook the unique constraints of serverless environments, which can lead to unexpected challenges after deployment. For instance, cold start latencies, limited execution durations, and vendor-specific configurations can complicate performance optimization and debugging. Without proper planning, teams may find themselves grappling with issues like distributed tracing or managing state in a stateless environment. To fully leverage serverless advantages, it’s critical to anticipate these trade-offs and align the architecture with the application’s specific needs from the outset.

Components of serverless architecture

Serverless offers a fresh approach to building and deploying apps. Its five core components work together to give you resilient, scalable systems, freeing you from infrastructure management.

- Functions as the primary compute units

Functions provide on-demand computing that runs only when triggered. Unlike traditional <a href=”/products/cloud-servers/”>cloud servers</a> that run continuously, functions are activated when needed (for an HTTP request, a scheduled event, or database change), execute their task quickly, then shut down automatically. The cloud provider handles all infrastructure management.

Functions are fundamentally designed so they:

- Remain dormant until needed

- Execute briefly (milliseconds to minutes)

- Shut down automatically when finished

- Are fully managed by the cloud provider

The setup creates true pay-per-use billing, that is, you pay only for actual execution time, with no costs during idle periods. Most functions [typically in a Function-as-a-Service (FaaS)] run for just milliseconds to minutes, which makes them ideal for intermittent workloads but not recommended for constant processing.

- API gateways for request handling

API gateways form the intelligent request processing layer for handling the critical HTTP intermediary between clients and backend functions. The purpose of gateway services is primarily to transform raw HTTP requests into structured events that trigger downstream functions, though applying layered security is another key responsibility. Compared to a traditional setup, functions in a serverless model don’t need to implement HTTP servers or maintain persistent connections.

API gateways are built to:

- Receive HTTP requests from clients

- Route those requests to appropriate functions

- Handle authentication and authorization

- Manage request throttling and rate limiting

The gateway abstracts complex concerns, including request routing, payload transformation, and cross-cutting concerns like authentication—essentially functioning as a specialized reverse proxy optimized for serverless architectures.

- Managed services for persistence (databases, storage)

Serverless applications store data in fully managed services instead of self-maintained database servers. Unlike traditional architectures where you provision and manage database instances, a serverless model uses cloud-provided data services that require no infrastructure management.

4. Event sources and messaging systems

Event architecture enables component communication without direct dependencies. The key aspect is to implement a loosely coupled system leveraging publish-subscribe models, where publishers can emit events without knowledge of subscribers. Functions can then coordinate complex workflows without direct dependencies, ultimately facilitating independent deployment.

Event mechanisms:

- Generate standardized notifications

- Deliver those notifications to subscribed functions

- Maintain delivery guarantees

The pattern also simplifies deployments, since components can be updated independently without coordinating changes across the entire system. Most mature serverless setups evolve toward this event-driven model over time, especially when they scale beyond the basic level or require greater flexibility in their component relationships.

5. Authentication and authorization services

Authentication services are used to externalize identity verification and access control from application code. These specialized components implement OAuth flows, token validation, and fine-grained authorization rules. Rather than embedding security code in each function, these specialized components:

- Handle user registration and login

- Validate security tokens

- Check permissions against policies

- Support industry standards like OAuth

Commonly used serverless architecture patterns

With serverless, there are various architecture choices that can be factored in on how your functions run, how well your system scales, and your budget requirements.

Some common serverless architecture patterns include the following:

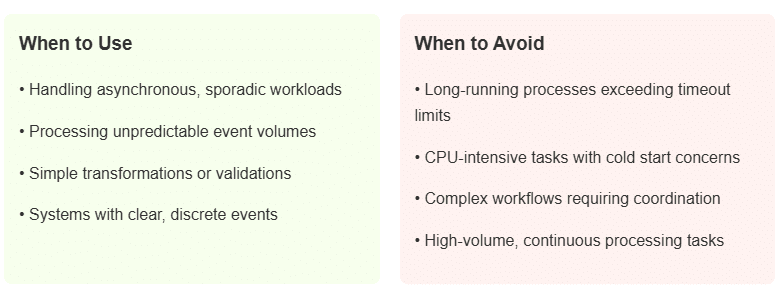

The event-processor pattern, the most basic serverless approach. Your functions simply respond to events from sources like API requests, messages, or database changes. This works great for handling unpredictable workloads that come in bursts. However, it’s not ideal for tasks that take a long time or need lots of processing power, since serverless platforms limit how long functions can run. Many teams start with this simple pattern before realizing they need something more sophisticated.

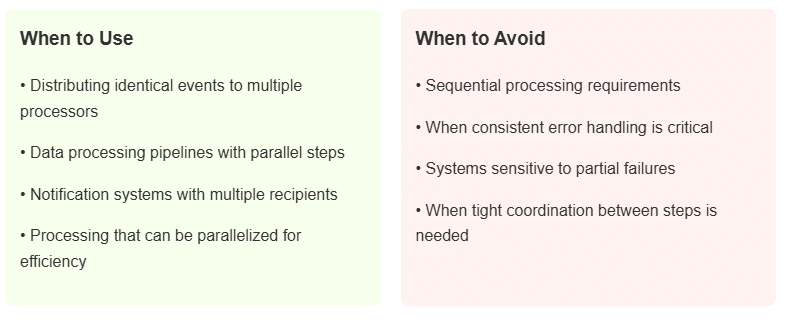

The fan-out pattern helps when one event needs to trigger many different processes at once. The event gets sent to a message system that can activate multiple functions simultaneously. The pattern is commonly chosen for processing data or sending notifications to many access points. The primary challenge is handling partial failures, when some functions succeed while others fail. This pattern can be used to analyze images. Typically, when you upload a photo, many functions simultaneously start to detect objects, faces, and text.

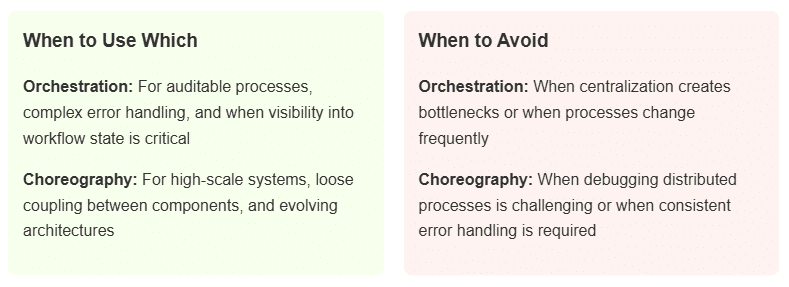

Orchestration versus choreography represents two ways to coordinate work. With orchestration, a central controller (like a state machine) directs the entire process step by step. With choreography, each function acts independently, based on events it receives. Financial companies often prefer orchestration because it makes tracking processes easier. High-traffic consumer apps, on the other hand, use choreography to avoid bottlenecks. Many enterprises use both: orchestration for critical business flows and choreography for everything else.

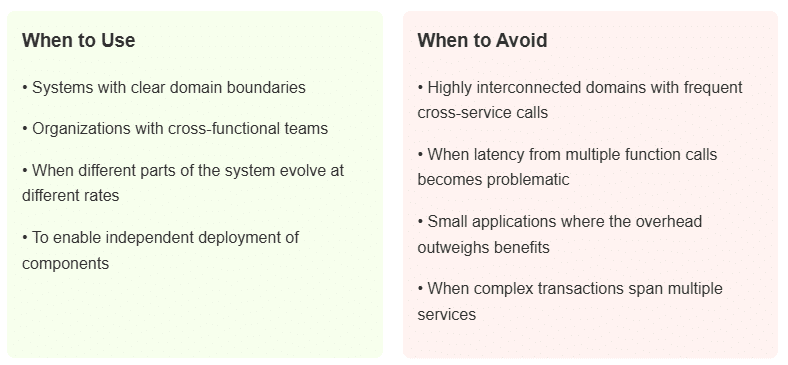

Serverless microservices group functions around specific business capabilities. Unlike traditional microservices, they don’t need servers running all the time. This framework is recommended when your business has clear boundaries between different areas, and your teams align with the boundaries. While separation looks good on architecture diagrams, note that real-world workflows can often cross domain boundaries repeatedly. In such cases, the cost and latency of many small function calls can add up quickly.

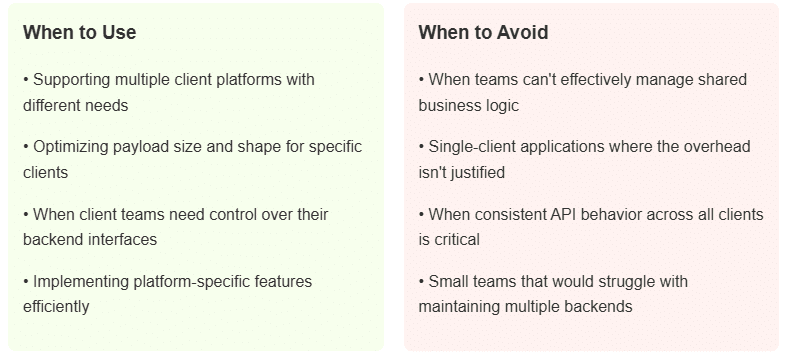

For systems with highly interconnected domains, the Backend for Frontend (BFF) pattern offers an effective solution by creating separate function layers for different client types. Instead of one API for everything, you build specialized backends for mobile apps, web browsers, or other interfaces. Many teams also implement BFFs as higher-level functions specifically designed around user journeys rather than individual domain operations. Although some consider code duplication across different backends as a potential drawback of the model, one solution involves implementing a thin BFF layer that primarily handles aggregation while delegating most business logic to the domain services.

Serverless considerations and constraints: What to know before you deploy

Serverless architectures transform the way you design systems, introducing initial constraints that demand thoughtful consideration. Beyond selecting the appropriate architectural pattern, consider the key factors that eventually determine independent scaling and deployment, tailored to your specific use case.

State management approaches

When choosing state approaches, consider access patterns first. If data needs consistent sub-50ms access, client-side or in-memory solutions would make sense. For more persistent storage needs, combination patterns work best—such as caching frequently accessed DynamoDB items in ElastiCache, or implementing write-through patterns where changes update both cache and persistent storage. If you have complex auditing requirements or need to address eventual consistency challenges, event sourcing provides significant advantages by storing immutable event logs rather than the current state.

Cold start mitigation

To address cold starts, focus first on runtime selection and package size. Node.js and Python functions initialize 3-5x faster than Java or .NET, due to runtime initialization overhead. Consider keeping deployment packages under 5MB by removing unnecessary dependencies and implementing tree-shaking during builds. For critical paths, provisioned concurrency eliminates cold starts entirely (though at an additional cost). Many teams also prefer to implement tiered warming strategies, such as using provisioned concurrency for user-facing functions, while allowing background processes to scale from zero.

Function boundary design

When designing function boundaries, optimize for data locality over theoretical domain purity. Functions requiring the same data should be packaged together regardless of domain concepts. If you have functions that frequently communicate or share significant data, package them as a single deployment unit to avoid cross-function call penalties. It is important to recognize that cross-function calls usually add 50-100ms latency compared to in-memory operations, subsequently impacting user experience in API-driven applications. If you have a monolithic data model that doesn’t cleanly separate, it may be more efficient to implement coarser-grained services, rather than forcing artificial boundaries that result in excessive cross-function communication.

Communication patterns

For function communication, consider using asynchronous patterns instead of synchronous calls whenever possible. If you have a new serverless implementation, you may want to start with direct function invocation for simplicity. As your system scales, consider gradually transitioning toward event-driven communication to better handle scaling challenges and simplify changes across services.

Security implementation

When securing serverless applications, focus on function-level permissions rather than service-level access. Each function should have its own IAM role with explicitly listed resources it can access, and should avoid wildcard permissions that expand attack surface. For managing configuration secrets, let your access patterns guide your technology choice.

Observability design

When implementing observability, standardize on correlation IDs passed through all system components. These identifiers enable tracing requests across function boundaries and asynchronous processing steps. Structure logs as JSON with consistent field names to simplify querying and analysis. If you have complex workflows spanning multiple functions, these correlation IDs become essential for tracing requests across both synchronous and asynchronous processing steps.

Final thoughts

Over the years, we have seen multiple case studies where serverless delivered extraordinary business outcomes. At the same time, there were many projects where adopting serverless architecture introduced crippling complexity and runaway costs.

The best serverless success stories are from organizations that use it as one tool in their toolkit, not as the solution to everything that goes wrong. These teams don’t try to force all their applications into a serverless model. Instead, they pick specific workloads where serverless truly shines.

As serverless technology gets better, some of today’s problems will disappear. Until then, it is important to make thoughtful choices about when to (or not to) use it.